Publications

You can also find my articles on my Google Scholar profile.

Benyamin Tabarsi, Aditya Basarkar, Xukun Liu, Dongkuan Xu, Tiffany Barnes, 2024

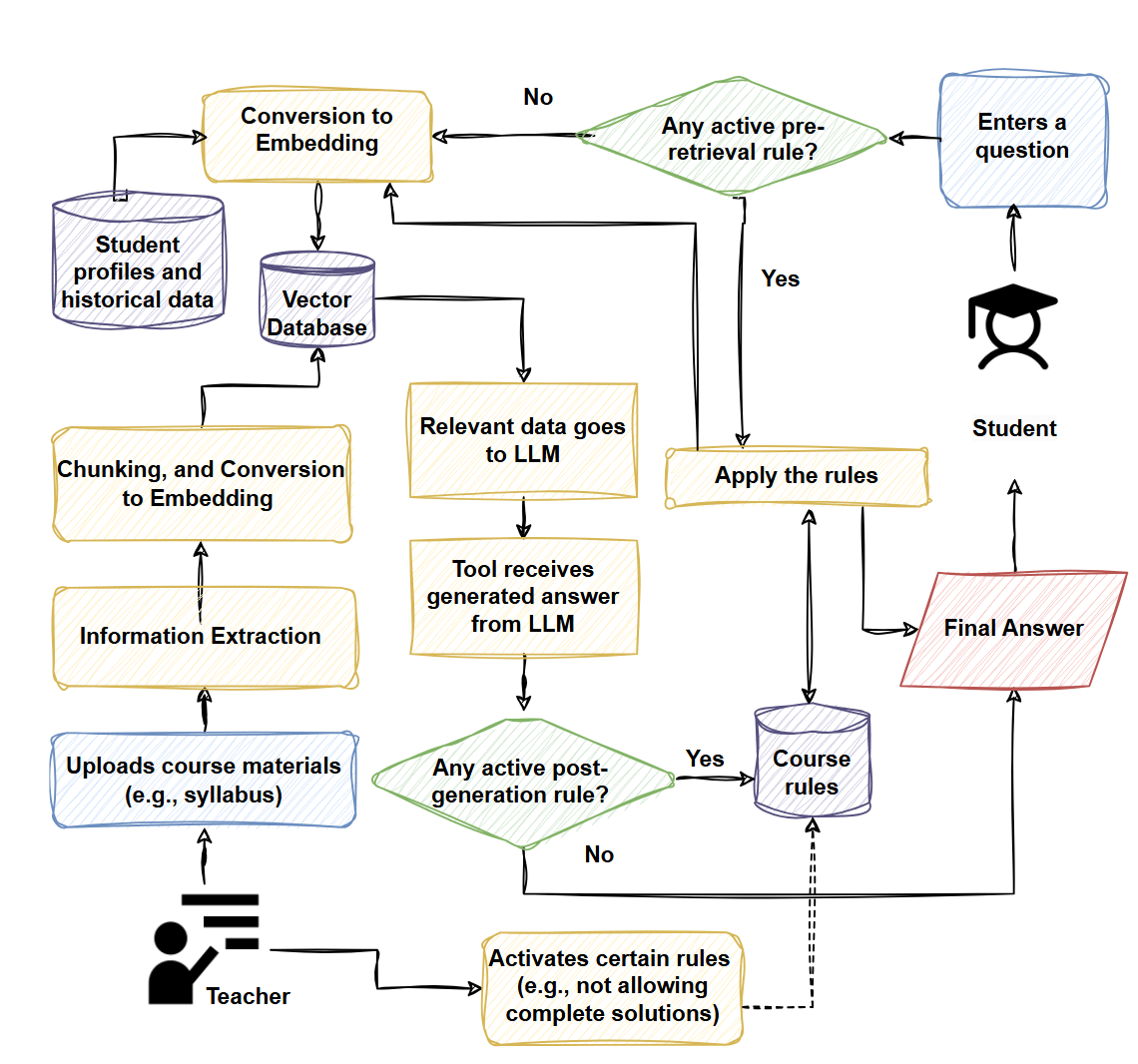

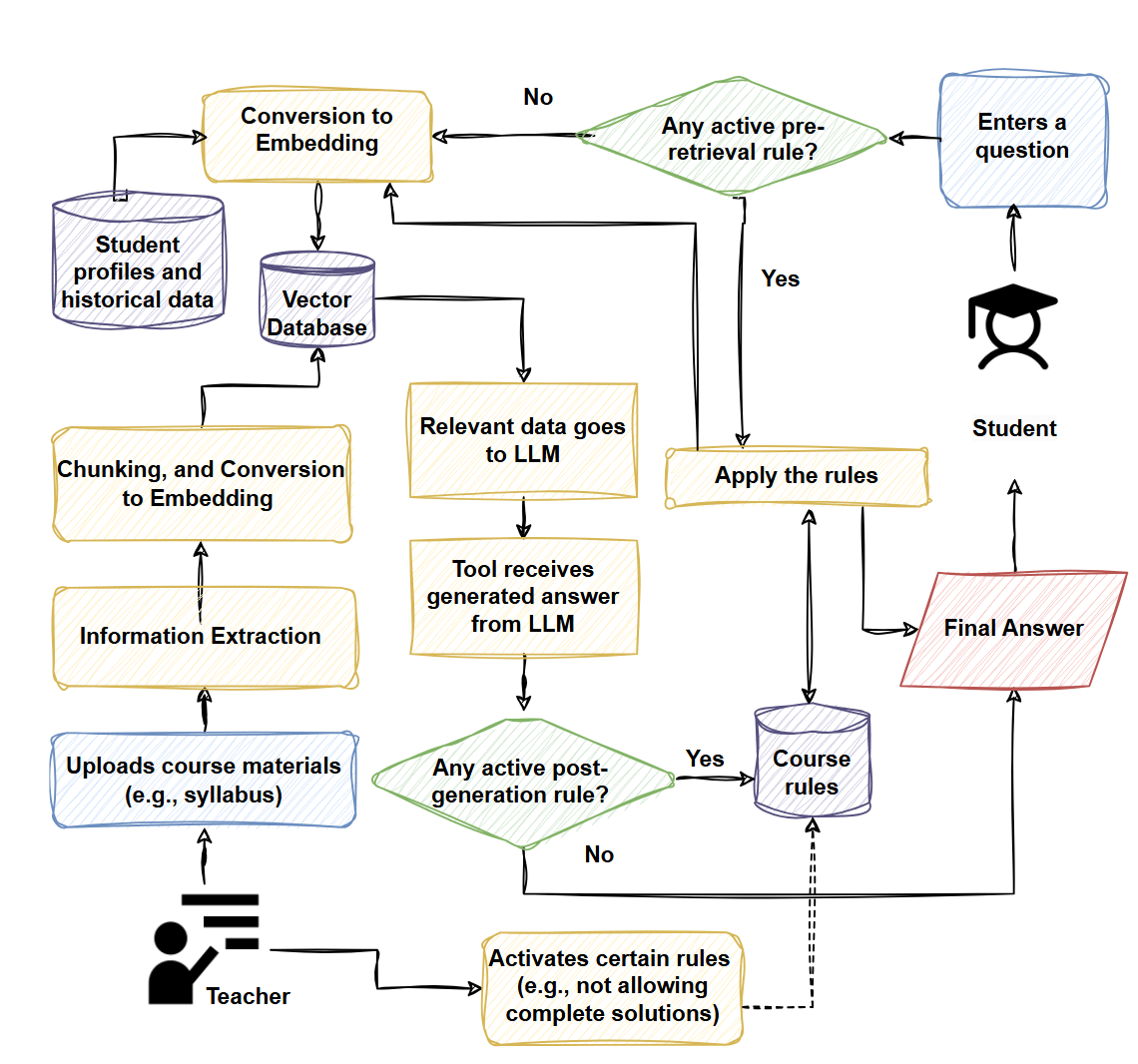

Large Language Models (LLMs) hold substantial potential in education, but concerns about academic misconduct, misinformation, and overreliance limit their adoption. To address these issues, we introduce MerryQuery, an AI-powered educational assistant that uses Retrieval-Augmented Generation (RAG) to provide contextually relevant, course-specific responses. MerryQuery features guided dialogues and source citation to build trust and improve student learning. Additionally, it enables instructors to monitor student interactions, customize response granularity, and input multimodal materials without compromising data fidelity. By meeting both student and instructor needs, MerryQuery offers a responsible way to integrate LLMs into educational settings.

Xukun Liu, Bowen Lei, Ruqi Zhang, Dongkuan Xu, 2024

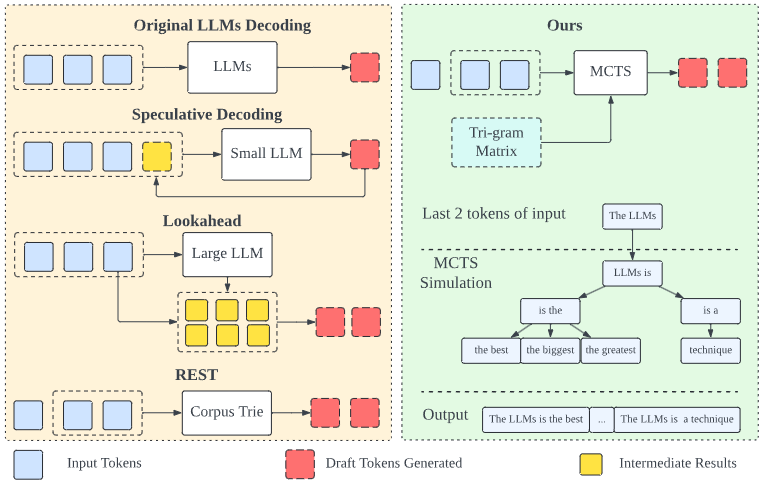

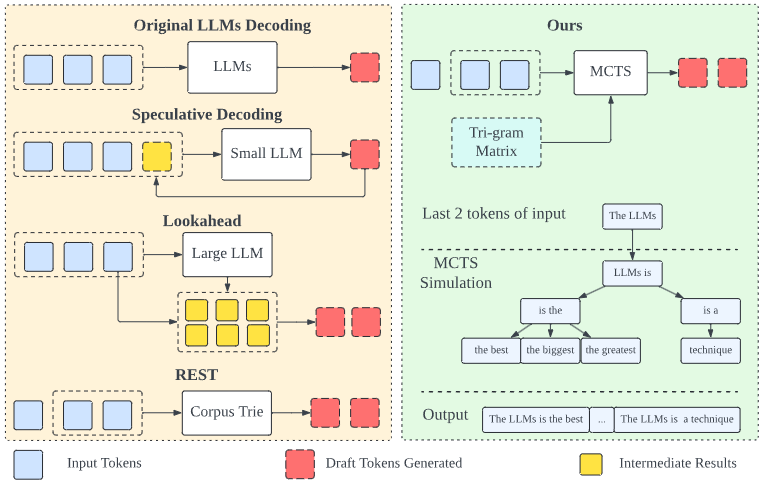

We introduce an LLM decoding acceleration method that requires no fine-tuning. Our approach involves an adaptive draft-verification process that evolves over time to improve efficiency. We use a tri-gram matrix-based LLM representation to dynamically approximate the output distribution of the LLM, allowing the model to adjust to changing token probabilities during the decoding process.

Download here
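As a rough illustration of the draft-verification idea in the abstract above, the sketch below pairs a tri-gram count table (the drafter) with a greedy verification loop. It is a toy under stated assumptions, not the paper's implementation: `TrigramDrafter`, `decode`, and the `target_next` callback (standing in for the LLM's greedy next-token choice) are hypothetical names, and verification here calls the target one token at a time, whereas a real system would check the whole draft in a single forward pass.

```python
from collections import Counter, defaultdict


class TrigramDrafter:
    """Toy tri-gram table: maps the last two tokens to counts of the next token."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def update(self, tokens):
        # Record every consecutive triple so the table adapts as decoding proceeds.
        for a, b, c in zip(tokens, tokens[1:], tokens[2:]):
            self.counts[(a, b)][c] += 1

    def draft(self, context, k):
        # Propose up to k tokens by repeatedly taking the most frequent continuation.
        if len(context) < 2:
            return []
        a, b = context[-2], context[-1]
        out = []
        for _ in range(k):
            nxt = self.counts.get((a, b))
            if not nxt:
                break
            c = nxt.most_common(1)[0][0]
            out.append(c)
            a, b = b, c
        return out


def decode(target_next, drafter, prompt, max_new, k=4):
    """Greedy decoding with draft-then-verify; output matches plain greedy decoding."""
    tokens = list(prompt)
    limit = len(tokens) + max_new
    while len(tokens) < limit:
        step = []
        for tok in drafter.draft(tokens, k):
            expected = target_next(tokens + step)  # hypothetical LLM call
            step.append(expected)
            if tok != expected:
                break  # first mismatch: keep the target's token, drop the rest
        else:
            # Draft was empty or fully accepted: take one more target token.
            step.append(target_next(tokens + step))
        tokens.extend(step)
        # Feed the newly produced tokens (plus two tokens of context) back to the drafter.
        drafter.update(tokens[-(len(step) + 2):])
    return tokens[:limit]
```

Because every emitted token is the target's own choice, the drafter only affects how many tokens are confirmed per step, not what is generated.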

Dong Shu, Haoran Zhao, Xukun Liu, David Demeter, Mengnan Du, Yongfeng Zhang, 2024

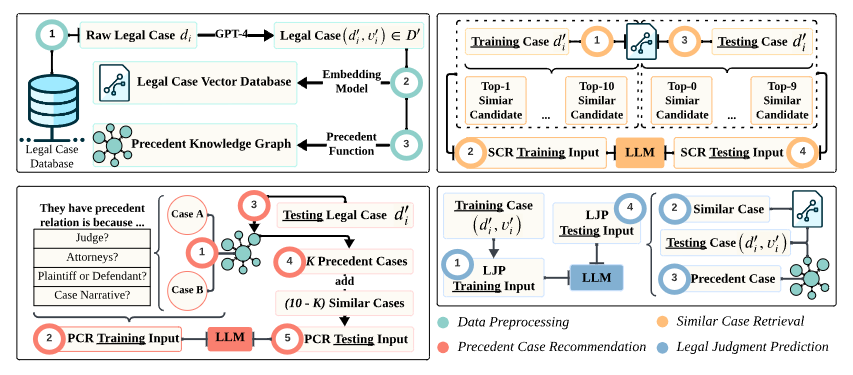

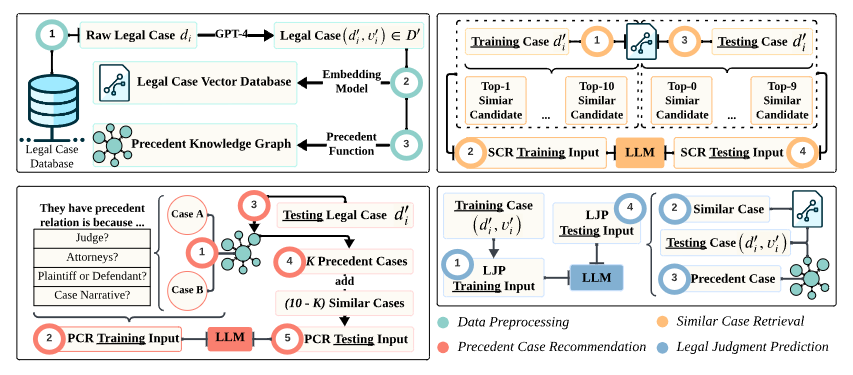

We introduce LawLLM, a multi-task model designed for the US legal domain, excelling in Similar Case Retrieval (SCR), Precedent Case Recommendation (PCR), and Legal Judgment Prediction (LJP). LawLLM distinguishes between similar and precedent cases, providing clarity for future research. We apply customized data preprocessing, in-context learning, and advanced information retrieval methods to transform raw legal data into a trainable format. LawLLM outperforms existing baselines in zero-shot and few-shot scenarios, addressing key challenges in legal analytics.

Download here

Xukun Liu, Zhiyuan Peng, DK Xu, 2024

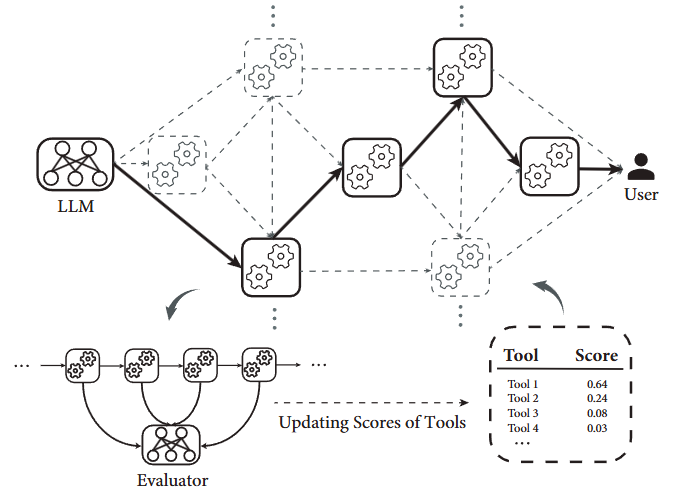

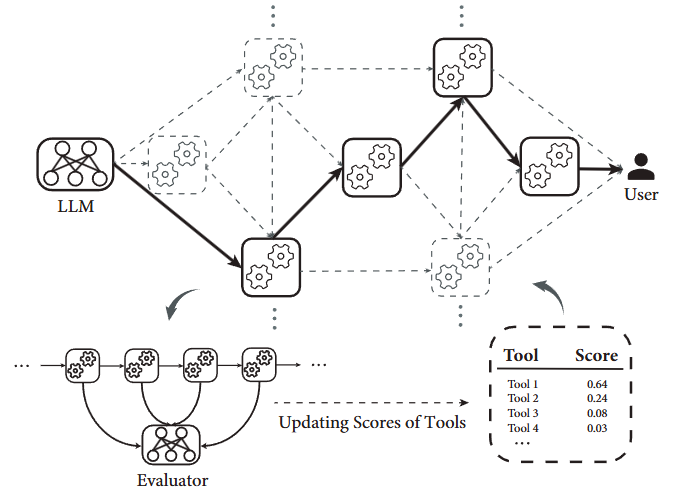

We introduce ToolNet, a plug-and-play method to assist LLMs in handling massive tool sets. ToolNet organizes tools in a weighted directed graph (nodes represent tools and edges denote tool transitions) based on the tool-use trajectories produced by LLMs. An LLM navigates the graph by iteratively choosing the next tool from the current tool's successors until the task is resolved. Graphs are updated online, enabling adjustment to accommodate the frequent updates of tools and new tasks.

Download here
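The graph navigation described in the ToolNet abstract above can be sketched roughly as follows. This is a minimal illustration under assumptions, not ToolNet's actual code: `ToolGraph`, `navigate`, and the `choose` and `is_done` callbacks are hypothetical names, with `choose` standing in for the LLM's selection among successor tools, and the additive weight update is only one simple way to update the graph online.

```python
from collections import defaultdict


class ToolGraph:
    """Weighted directed graph: nodes are tools, edges are observed tool transitions."""

    def __init__(self):
        self.weights = defaultdict(dict)  # tool -> {successor: weight}

    def add_trajectory(self, tools, reward=1.0):
        # Online update: strengthen each transition seen in a tool-use trajectory.
        for src, dst in zip(tools, tools[1:]):
            self.weights[src][dst] = self.weights[src].get(dst, 0.0) + reward

    def successors(self, tool):
        # Candidate next tools, highest-weight first.
        edges = self.weights.get(tool, {})
        return sorted(edges, key=edges.get, reverse=True)


def navigate(graph, start, choose, is_done, max_steps=10):
    """Walk the graph, letting `choose` (e.g. an LLM) pick among successors."""
    path = [start]
    while not is_done(path) and len(path) <= max_steps:
        options = graph.successors(path[-1])
        if not options:
            break
        path.append(choose(path, options))
    return path


graph = ToolGraph()
graph.add_trajectory(["start", "search", "calc", "finish"])
graph.add_trajectory(["start", "search", "finish"])
graph.add_trajectory(["start", "search", "calc", "finish"])

# Stand-in policy: always take the highest-weight successor.
path = navigate(
    graph,
    "start",
    choose=lambda path, options: options[0],
    is_done=lambda path: path[-1] == "finish",
)
```

Restricting each step to a tool's successors keeps the choice set small even when the total number of tools is large.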

Binfeng Xu, Xukun Liu, et al., 2023

Gentopia is a lightweight and extensible framework for LLM-driven Agents and ALM research. It provides essential components to build, test and evaluate agents. At its core, Gentopia aims to assemble an agent with a single config, thus minimizing your effort in building, tuning, and sharing agents.

Download here